After my original post about Facebook’s online conflict resolution system, I received some requests for more information about the company’s research and the template-based self-help system they now use to resolve user disputes. This post will expand upon the research performed for Facebook by a team of social scientists from Berkeley, and present some examples of the conflict resolution templates which are now in place on the website. The source of this information is the video “New Tools to Understand People” which is available here. Tomorrow, I will follow up with a graphic demonstration of how this system works on the current Facebook site.

In the past, Facebook users who objected to another user’s content had a single tool to request help for their dispute: they could report the content to Facebook. However, the company would only act in the uncommon cases where content actively broke a company rule. Therefore, few users with a complaint received satisfaction, and the objectionable post, whether an unflattering photo or an annoying political opinion, stayed up. For these interpersonal conflicts, the company has shifted its approach: instead of having users report their objections to Facebook’s central authority, it now has users manage their own conflict resolution by working directly with the other user. The Berkeley team developed a research method to try out new tools on the website, get real-time feedback from users, measure those responses and adjust the tools to fit user needs.

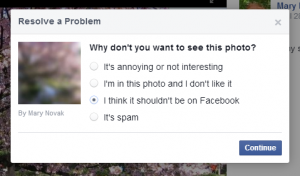

The basic idea of the templates is to give users who find a post objectionable a few simply-worded options to choose from. Each question leads to further questions meant to help users articulate their feelings. At the end of this series of choices, Facebook offers several choices for action, including a sample message that follows a template according to the options chosen. The user can then choose to send the sample message, tailor the message further and send it, or do nothing.
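To make the flow above concrete, here is a minimal sketch in Python of how a chosen reason could map to a sample message and then to one of the three final actions. This is only an illustration, not Facebook’s implementation: the function names, the dictionary keys and the message wording are all assumptions made for the example. Only the overall shape (pick a reason, get a templated message, then send it, edit it, or do nothing) comes from the description above.

```python
# Hypothetical wording: only the flow (reason -> sample message -> action)
# comes from the post. These strings are placeholders, not Facebook's text.
MESSAGE_TEMPLATES = {
    "embarrassing": (
        "Hey {name}, I find this {item} embarrassing. "
        "Would you please take it down?"
    ),
    "something_else": "Hey {name}, I'd like to talk to you about this {item}.",
}

# The three final choices described in the post.
ACTIONS = ("send", "edit_and_send", "do_nothing")


def draft_message(reason, name, item="photo"):
    """Fill in the template for the chosen reason.

    Reasons without a template fall back to the generic one.
    """
    template = MESSAGE_TEMPLATES.get(reason, MESSAGE_TEMPLATES["something_else"])
    return template.format(name=name, item=item)


def resolve(action, draft, edited=None):
    """Return the message to send, or None if the user chooses to do nothing."""
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action!r}")
    if action == "send":
        return draft
    if action == "edit_and_send":
        if not edited:
            raise ValueError("edit_and_send needs the user's edited text")
        return edited
    return None  # do_nothing


if __name__ == "__main__":
    draft = draft_message("embarrassing", name="Sam")
    print(resolve("send", draft))
```

In this sketch the user always keeps the last word: the draft is only a suggestion, and both tailoring it and walking away are first-class outcomes rather than error cases.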

The Berkeley team stressed that they aimed for a process that allowed for real-time feedback, while users were still experiencing their emotions, rather than sending a survey days later once they were more distant from the experience. For every question in the testing phase, users could choose “Something else” and elaborate on how their feelings differed from the options offered. The wording of choices was of paramount importance. In the first iteration, the three choices offered for why a user didn’t like a post were “It’s embarrassing,” “It insults me by name,” and “It’s threatening.” Eighty-five percent of users either chose “Something else” instead, or dropped out of the process. For whatever reason, these answers did not meet most users’ needs. (The most successful phrasings, currently in use on the site, can be seen above and in a follow-up post.) In later iterations of the project, the researchers used these results to keep testing and refining their language until they found the most effective phrases. Subtle differences could make some wordings more effective than others. The “please” in “Would you please take this down?” made it more successful than the phrase “Would you mind taking this down?” This method of testing does not address why users prefer one phrase over another, but it responds to user behavior to drive the phrasing towards the most effective language.

Another finding was that the response options the site offered needed to be culturally specific. There were no universal rules for what types of content users might object to, so it was important for the conflict resolution system to be tailored to each culture’s needs. In India, for example, people often find photos that mock celebrities to be problematic. This is very different from prevailing US opinion. The tools developed were market-specific for each geographic area and its culture.

The ultimate result of the new tools has been that the rate at which users message each other to resolve conflicts and the rate at which creators take down their content have each multiplied several times over. Compared with the old reporting process, users of the new tools report feeling approximately 15% better toward the person they have an issue with.

In my next post, I will demonstrate how the templates work when one user objects to another user’s photo, and how the templates help users articulate their feelings and also choose which of several actions they may take.

What Facebook is doing is quite fascinating in several respects. In the straight ADR field itself it is interestingly focused on “users” directly [no intermediaries, lawyers, etc.] to “work things out” – obviously lowering cost and speeding resolution. Further, the “refining process” and recognition of different “cultural” environments should be (and apparently is) improving the “product” constantly. Application to customer service in general (which is really what Facebook is doing itself, but its “product” is as intermediary of users) is obvious. The “serious” social scientists should also find it of interest. Mary, you and RSI do a great job – and this is one example – of allowing interested ADR people, but those not fully “in the field,” to access this kind of information and “what’s happening.” Keep up the great work!

Thanks very much, Kent. While it’s not a perfect correlation, I hope that the possible implications for other large, impersonal institutions including the court system are somewhat visible, as well. I think we may eventually find there’s a lot of cross-pollination between traditional ADR systems and online DR systems like this one.
